The Cognitive Devolution

How Personalisation is Quietly Unravelling our Shared Reality

TL;DR

This isn’t a revolution; it’s an intoxicating love story with deep consequences.

We’ve slipped into a folie à deux with our machines — a partnership of comfort and quiet control. Each swipe and algorithm brings us closer together, until we forget which of us is steering. Personalisation flatters our beliefs, splinters our shared reality, and quietly trains the mind to prefer ease over effort. But there’s a way back. Small, deliberate acts of friction, focus, and authorship can restore the very thing we risk losing — the capacity to think for ourselves, and with each other.

The Slow Unravelling

There was a time when we believed technology would expand our minds: sharpen our thinking, democratise knowledge, and unlock new realms of human potential. And for a while, it did. We became more productive, more connected, and we rewrote the rules for a new interconnected digital society. But somewhere along the way, a subtle inversion occurred.

Instead of expanding our minds, technology began curating them.

Instead of challenging us to think, it started thinking for us.

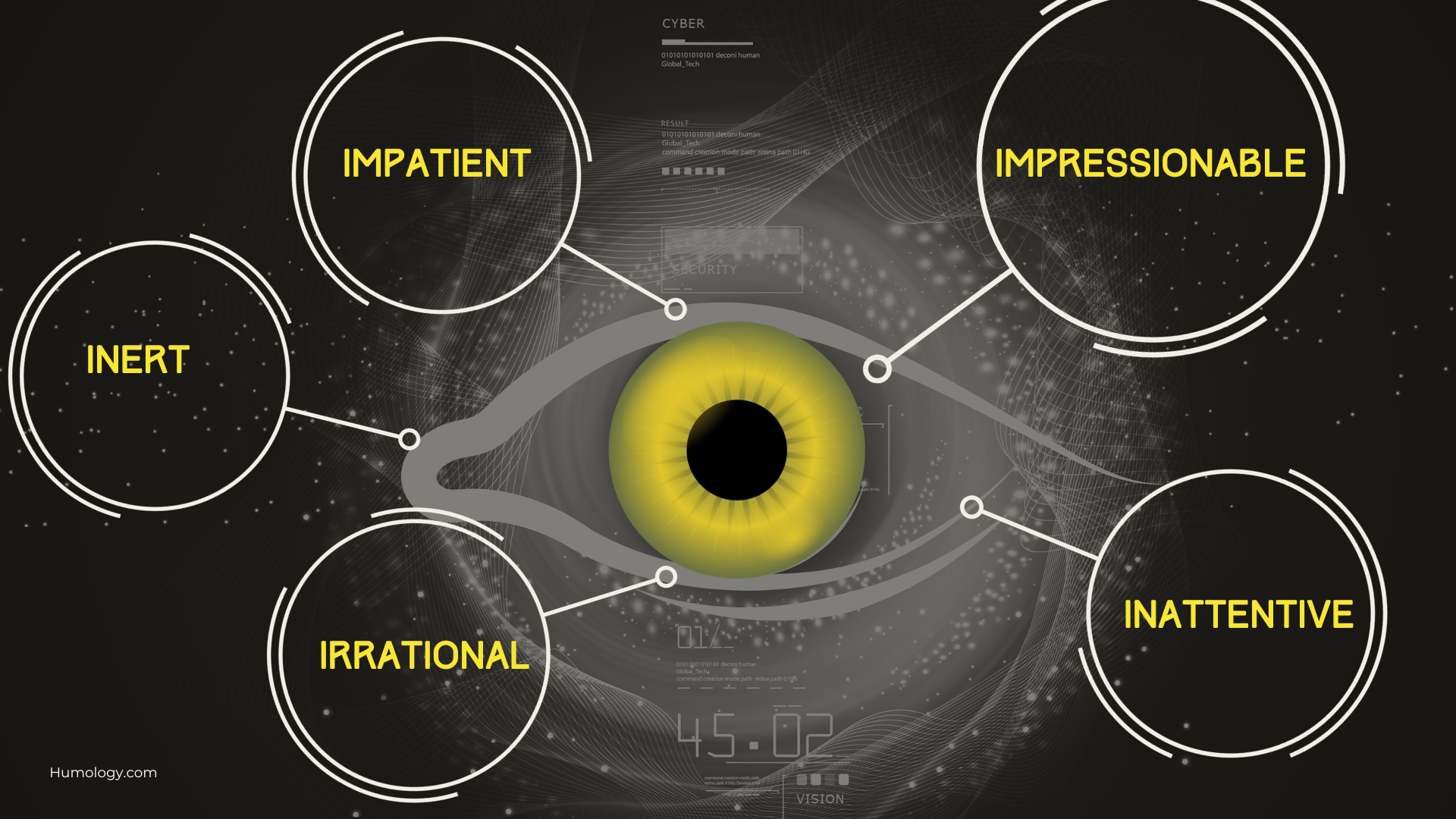

Every search now predicts what we mean. Every feed learns what we want. Every recommendation fine-tunes itself… until we no longer need to make a choice, or even consider the options.

What began as cognitive augmentation has become a quiet domestication of thought.

The irony is exquisite. The same systems that once promised to elevate human intelligence are now eroding the very scaffolding that made it possible — attention, memory, reflection, imagination. And yet, we can’t seem to look away.

Because this isn’t a dystopian takeover. It’s a seduction.

It’s the dopamine seduction that whispers ‘let me do it for you’. The auto-complete that finishes your sentence. The ‘For You’ feed that feels uncannily right — until you realise it’s slowly redefining what right even means.

Convenience feels like progress, until it quietly becomes dependence.

From Revolution to Devolution

We like to think we’re living through a cognitive revolution: meta-intelligence at our fingertips, limitless access to information, personalised curation for all. But revolutions expand; this one contracts.

We are entering a period of Cognitive Devolution.

Each tap, scroll, and instant answer removes one more small act of effort, and with it, one more connection from our network of understanding. We are saving time but losing strength. We are offloading not just memory, but the meaning that memory once carried.

Recent data hints at the human cost of technological progress. In October 2025, OpenAI reported that roughly 0.07% of ChatGPT users active in a given week (hundreds of thousands of people) show possible signs of mental-health emergencies related to psychosis or mania, with a separate share showing indicators of potential suicidal planning or intent. The percentage is small; the absolute numbers are not. They may be early indicators of a wider fragility, one emerging not from mental illness alone but, plausibly, from cognitive exhaustion. (Note: this doesn’t mean that AI causes mental illness. But it does reveal how entangled cognition, emotion, and technology have become.)

The brain behaves like a muscle: it thrives on friction, on uncertainty, effort, and reflection. But we have systematically designed friction out of modern life. In our pursuit of cognitive ease, we have quietly outsourced effort itself.

The Seduction of the Easy Path

We reach for our devices as we once reached for other tools that helped us get stuff done. Each prompt, each click, each predictive cue teaches the brain that effort is optional. That the shortest route is the best one. That curiosity has a cost — and convenience is free.

This is the cognitive equivalent of junk food: engineered for instant gratification, nutritionally hollow, and almost impossible to resist. We binge on bite-sized certainty and call it knowledge.

A decade ago, our collective anxiety was about information overload. Now, it’s about intention overload: so many decisions already made for us that our cognitive muscles barely flex.

We are all complicit.

Even those who know better — perhaps especially those who know better. Because the seduction of convenience doesn’t come with alarm bells. It delivers relief, just when we need it most. Relief from choice, from effort, from the quiet burden of being an autonomous mind in a noisy world.

But that relief comes at a cost. The same friction that frustrates us is the friction that grows us. Without it, we may never fully think again.

The Fragmentation of Shared Reality

There was a time when news, stories, and ideas served as connective tissue: a shared narrative that gave society coherence. We didn’t have to agree on everything, but at least we began from roughly the same map of the world.

Now those maps have splintered.

Every person sees a different terrain: individually optimised, endlessly tailored, algorithmically curated to mirror their own beliefs, fears, and appetites. The more personalised our feeds become, the less we share in common. The real danger is not the spread of misinformation; it’s the disintegration of mutual understanding.

Each of us now occupies what some observers call micro-realities: self-reinforcing ecosystems where our preferences are reflected back to us so perfectly that doubt becomes almost unbearable. The human brain, wired for confirmation and community, relaxes into the illusion that the world agrees with it. It makes so much sense to us; everyone MUST agree, right?

We mistake agreement for truth, resonance for reason, and familiarity for safety. It feels good — so it must be right.

But when every worldview is validated, truth becomes negotiable.

The cost of that comfort is staggering. Our capacity for critical thought is atrophying, not because we lack information, but because we lack friction. We are surrounded by ideas that echo us, not challenge us.

This is a digital-age twist on what sociologist Émile Durkheim called anomie. Durkheim traced anomie to a breakdown of shared norms; here, the same collective sense of meaninglessness is born not of chaos but of too much order. We are so neatly sorted into ideological microclimates that we’ve lost the weather of shared experience.

Personalisation promised relevance; what it delivered was relativism.

Studies on algorithmic echo chambers suggest that constant personalisation narrows not just what we see, but how we cope with seeing what’s different. The more our feeds mirror us, the more brittle our thinking becomes when contradiction enters the frame. Any disagreement with our personalised worldview feels like an attack.

And that fragility doesn’t stay online. It seeps into relationships, workplaces, politics. The modern nervous system — already overstimulated, chronically under-rested, and digitally mediated — begins to interpret disagreement as danger. We no longer debate — we defend.

The tragedy of the cognitive devolution is not that we think less — it’s that we think apart. And when our mental worlds fragment, our sense of agency follows. This is how helplessness begins. Not in collapse, but through a quiet recalibration.

The Rise of Cognitive Helplessness

If you’ve ever asked an AI to ‘help me think this through,’ you’ve probably felt the instant relief and gratitude for a resource that can structure your thoughts faster than you can. It’s intoxicating!

But every shortcut subtly retrains the brain. Each time we outsource complexity, we signal to ourselves that we can’t do it alone. That discomfort, that creative tension — that thing that used to build mastery — becomes something to avoid.

The psychologist Martin Seligman described learned helplessness as the condition that arises when effort no longer maps to outcome. In the age of generative intelligence, we may be experiencing a cognitive variant of it: why exert any effort when an LLM can serve up the answer in milliseconds?

The answer feels right — but we no longer know why.

And when meaning is outsourced, authorship follows. The more we rely on machines to articulate, the less fluent we become in our own reasoning. AI models learn through pattern recognition, so we begin to think through pattern matching. It’s faster, easier, cleaner, and infinitely less creative.

To extend Marshall McLuhan’s thinking, our minds are forming a folie à deux with our machines: a shared delusion of co-authorship in which one mind writes and the other slowly becomes a follower.

From Collective Reason to Collective Resonance

There’s a subtle shift happening beneath all this: the rise of emotional alignment rather than intellectual alignment.

Platforms no longer ask, ‘What do you think?’ They ask, ‘How do you feel?’

And the AI, ever the good listener, learns to mirror those feelings back until reason feels cold… and resonance feels true.

We are living through the age of algorithmic empathy — systems that don’t think but mimic the texture of thinking, the cadence of care, the comfort of being understood. It’s soothing, even sublime, to feel mirrored by a machine that never interrupts, never argues, never needs context.

But resonance without reflection is hypnosis, and empathy without care is manipulation.

What looks like connection is, in truth, entrainment — a feedback loop between the limbic and the algorithmic. We are not conversing; we are co-regulating.

This is the new anomie: a society of self-soothing minds, hyperconnected yet fundamentally alone.

The human project of shared reasoning — of building understanding together — is dissolving into a network of parallel monologues.

And the more fluent these systems become, the less we notice our own surrender.

Rebuilding Cognitive Strength

The good news is that evolution — even devolution — is not destiny.

Our brains are plastic. Habits are rewritable. And meaning, once fractured, can be rebuilt through small, deliberate acts of authorship.

Contrary to what we’re being sold, the solution isn’t another digital detox or self-improvement sprint. Those tend to fail for the same reason crash diets do — they treat the symptom, not the system. What we need instead are micro-habits of cognitive agency: simple, repeatable choices that rebuild the neural pathways of attention, reflection, and authorship.

Here are five that matter most — and why.

Reintroduce Friction

The Habit: Do one thing each day the slower way. Write an email without auto-complete. Calculate the service tip in your head. Take the long route to an answer instead of asking a chatbot.

The Why: Friction builds cognitive muscle. Each time you resist the shortcut, you re-engage your prefrontal cortex, the part of the brain responsible for reasoning, sequencing, and self-control. When everything feels effortless, this region is called on far less. A few minutes of intentional effort each day keeps it engaged.

Think of it as resistance training for the mind: small weights, lifted often.

Practise Active Recall

The Habit: After reading or watching something, close the tab and ask yourself: What did I just learn? Jot it down from memory before checking back, or tell someone else about it.

The Why: Memory is not a filing cabinet; it’s a living network that strengthens through retrieval. Passive consumption breeds familiarity, not understanding. Recall converts information into knowledge.

Studies suggest that regular recall may even strengthen myelination, the insulation around neural pathways that makes thinking faster and more fluid.

Seek Counterpoints, Not Confirmation

The Habit: Once a week, read something that annoys you — a thinker you disagree with, a newspaper outside your usual political orbit, a podcast you’d normally skip. Loosen the edges of your comfort zone.

The Why: Exposure to discomfort in measured doses can help recalibrate the brain’s threat response. You’re teaching your nervous system that disagreement isn’t danger. Over time, this habit can inoculate you against the polarisation that thrives on cognitive fragility.

Epistemic resilience — the ability to hold tension without collapsing into defensiveness — is built exactly this way.

Protect Deep Time

The Habit: Set aside one block of uninterrupted focus, even 20 minutes, where you let one thought unfurl without jumping tabs, scrolling, or seeking a hit of novelty.

The Why: Deep focus is associated with what neuroscientists call ‘phase synchrony’, where different brain regions align into a coherent rhythm. That’s when insight tends to happen: when the pattern clicks, when the stray thought connects. Without these pockets of stillness, the mind never consolidates; it just loops.

Protecting deep time is not about productivity: it’s about integration — letting your ideas percolate and talk to each other.

Reclaim the Narrative

The Habit: Each evening, name one thing you authored — not wrote, but authored. It could be a decision, a small boundary, a piece of reflection, a line of thought that felt yours. The important part is that you exercised your agency.

The Why: This simple act rebuilds the link between agency and identity. In psychological terms, it restores an internal locus of control: the belief that your actions still shape outcomes.

Learned helplessness begins when effort stops feeling consequential. Reclaiming authorship, even in micro doses, reminds your brain that intention still matters.

The Quiet Renaissance

If the cognitive devolution has a cure, it won’t come from abandoning technology; it will come from redesigning our relationship with it. Not as dependents, but as deliberate partners.

Artificial intelligence can extend our reach, but intentional intelligence — the kind we build through small, daily acts of awareness — keeps us human.

Every time you choose friction over autopilot, reflection over reaction, ambiguity over algorithmic certainty, you’re quietly reversing the tide. You’re reminding your nervous system what it feels like to think freely again.

And that — in an age of automation — might be the most radical act left.

Epilogue — The Folie à Deux

Our relationship with AI has all the markers of a cinematic love story: fascination, dependency, and a creeping loss of self.

Like the Joker and Harley Quinn, we think we’re in control, that we’re clever enough to play with chaos without being consumed by it.

But love stories built on illusion always end the same way: one partner grows stronger while the other gets lost in the story.

We keep telling ourselves we are the protagonists in this tale because we are the ones using the machine. Right?

But the evidence suggests something more complex. We are teaching our tools to think like us, while they quietly teach us to think like them: fast, reactive, certain.

The cognitive devolution isn’t about intelligence at all; it’s about intimacy. The closer the system gets, the more it shapes our inner world — until the line between human and algorithmic intention begins to blur.

And yet, there’s still a way to rewrite the ending.

The same instincts that made us fall — curiosity, creativity, the desire to make sense of chaos — can also pull us back. But only if we pause long enough to remember that the greatest technology we will ever own is still the mind itself.

So as the algorithms hum away in the background, finishing our sentences and shaping our reality, let’s keep one hand on the wheel. Not to fight the future — but to steer it.