The Journey to Ethical Artificial Intelligence

Somebody once quipped that ethics is like the company dishwasher. Everyone is responsible for emptying it, and we all benefit from it. But the person who cares most about it will end up doing it! This is true of ethics but perhaps even more so for the more specific domain of ethics in artificial intelligence.

Ethics is a system of moral principles that affect how people lead their lives and make decisions. Ethics can provide us with useful moral maps, or frameworks that can guide us through conversations on complex issues. Ethics is more than just 'being good'. It's about fairness, treating people and other entities with dignity and respect, and behaving in ways that don't harm others. According to the EU, the concern of ethics in AI "is to identify how AI can advance or raise concerns to the good life of individuals, whether in terms of quality of life, or human autonomy and freedom necessary for a democratic society".

I define AI as machines acting to mimic human cognition to solve problems. The most common components are Machine Learning (ML), Natural Language Processing (NLP), and Robotics. AI has made huge strides in recent years thanks to increased computing power (including GPUs), the exponential rise in the availability of big data, and the development of new algorithms. In 1996 the world was amazed when Deep Blue, an IBM supercomputer, beat Garry Kasparov, then world champion, in a game of chess. A short 20 years later, AlphaGo, a program from Google DeepMind, defeated Lee Sedol, one of the strongest players in the world, at the game of Go. This game originated in China thousands of years ago and was considered the Holy Grail for AI because the number of possible board positions is astronomically large. In recent years we have seen the phenomenon of large language models that can write articles, news reports and poetry, and even produce computer code. The most prominent of these models are GPT-3, BERT and OPT-175B.

Parallel to the rise in the technical abilities of AI has been society's concern with whether an AI will ever become sentient or self-aware. We have come a long way from ELIZA, the first chatbot, which was developed at MIT in 1966 to emulate a psychotherapist and fooled many people into believing she was human. MIT's Joseph Weizenbaum programmed ELIZA to respond to specific keywords in the typed input text. Earlier this year, a Google engineer, Blake Lemoine, claimed that Google's AI tool LaMDA had become sentient.
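To make the keyword idea concrete, here is a minimal sketch of how a program in the spirit of ELIZA might pick a reply. The rules, the replies and the `respond` function are hypothetical illustrations, not Weizenbaum's original script.

```python
import re

# Hypothetical keyword rules for illustration only; these are not
# taken from Weizenbaum's original ELIZA script.
RULES = [
    (re.compile(r"\bmother\b", re.IGNORECASE), "Tell me more about your family."),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\balways\b", re.IGNORECASE), "Can you think of a specific example?"),
]
DEFAULT_REPLY = "Please go on."


def respond(text: str) -> str:
    """Return the reply of the first rule whose keyword appears in the text."""
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse any captured words, minus trailing punctuation.
            captured = [group.rstrip(".!?") for group in match.groups()]
            return template.format(*captured)
    return DEFAULT_REPLY


print(respond("I feel sad today."))
print(respond("The weather is nice."))
```

Nothing here understands the sentence. The program simply reflects the user's own words back, which is part of why the illusion of a human listener was so striking.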

All of the above leads to the natural conclusion that we need to focus more on AI's ethical implications in the coming years. In this article, I will consider AI ethics from four perspectives: individual, product or company, the tech industry, and society.

Individual

Whether we are consumers of products that use AI or developers of these tools, we all have a role to play in ethical AI. For those working in technology, I suggest the trolley problem as a way to start the conversation about how they approach ethical dilemmas. The trolley problem is a thought experiment that has been a mainstay of philosophy lectures for decades. In the scenario, a runaway trolley, or tram, is heading toward five people. You can pull a lever to divert the trolley onto a separate track, saving the five people but killing one person on the second track. How would you respond in this situation? Is doing nothing or refusing to make a decision the same as taking action? I think we can also see some obvious parallels to the ethical choices the AI industry will face when self-driving cars become commonplace.

Let's consider for a moment the example of a machine learning engineer who is gathering and building a data set as input to a machine learning model. They are trying to determine how to abstract from reality, and 'abstraction from reality is never neutral, and the abstraction itself is not reality; it is a representation'1. They need to consider whether the data set represents the community for which the model is being built.
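One simple way to start asking that question is to compare each group's share of the data set with its share of the community. The sketch below is only an illustration under stated assumptions: the function names, the group labels, the population shares and the 5-percentage-point tolerance are all hypothetical, and a real project would need far more care in defining groups and sourcing reference figures.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return {group: sample share minus population share}.

    sample_groups: one group label per record in the data set.
    population_shares: assumed share of each group in the community.
    """
    total = len(sample_groups)
    if total == 0:
        raise ValueError("sample_groups is empty")
    counts = Counter(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


def under_represented(gaps, tolerance=0.05):
    """List the groups whose share falls short by more than the tolerance."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical data: 100 records labelled with a group, and assumed
# population shares for the community the model will serve.
sample = ["A"] * 67 + ["B"] * 28 + ["C"] * 5
population = {"A": 0.50, "B": 0.30, "C": 0.20}

gaps = representation_gaps(sample, population)
print(under_represented(gaps))
```

A check like this only flags a gap in the numbers. Deciding which groups matter, and what to do about a shortfall, remains a human judgement.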

If you are an individual working on AI products, you might consider using the Data Ethics Canvas from the Open Data Institute. This is a tool you can download and use for free. It provides considerations and questions for any data project under the headings of data, impact, engagement and process.

Project or Product

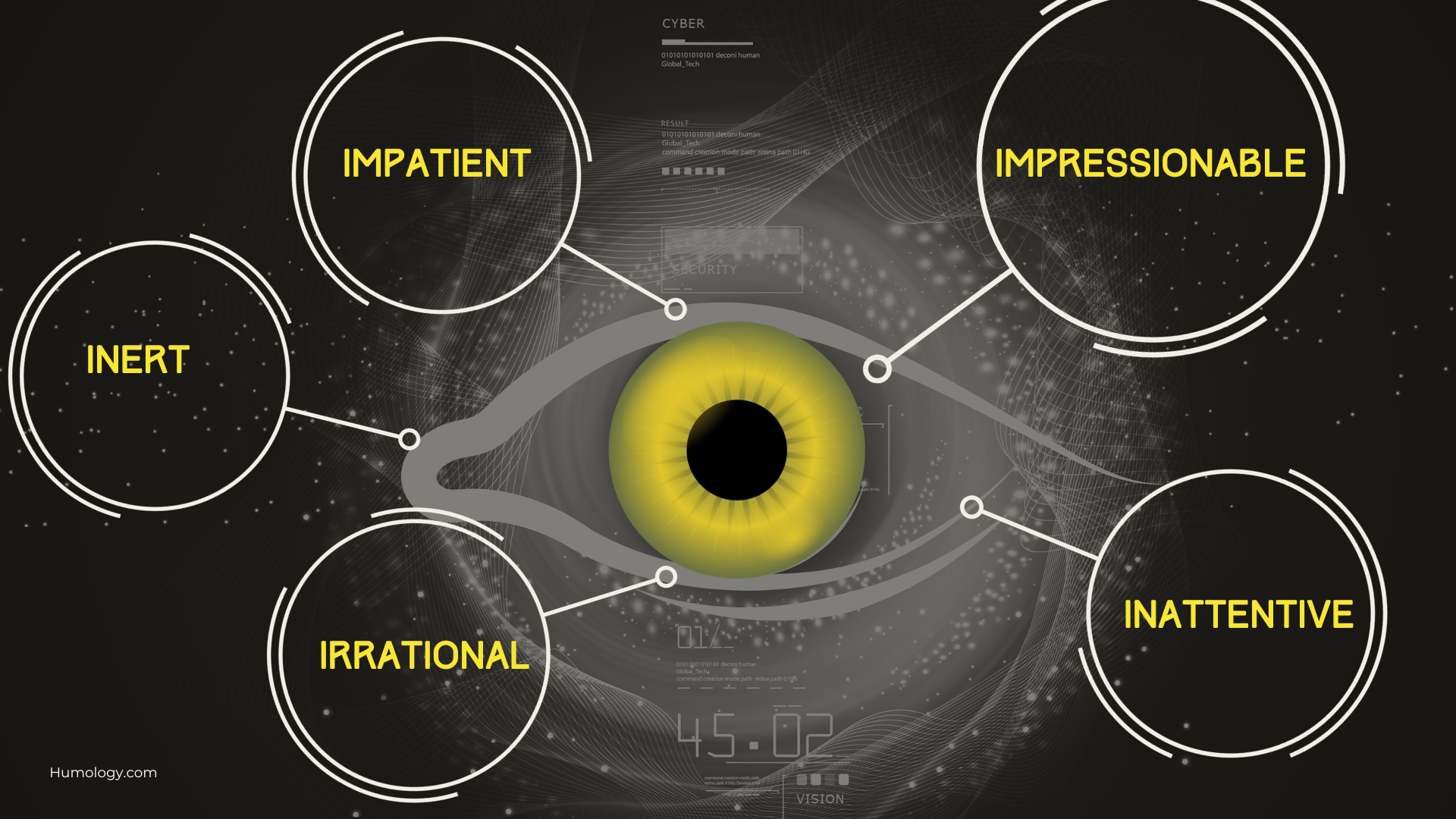

Those working in the tech sector also need to consider the ethics of the project they are working on or the product being built. One of my favourite books from recent years is Nir Eyal's Hooked2. In this book, he presents his Manipulation Matrix, which allows 'entrepreneurs, employees and investors' to answer the question: should I hook my users on this product? Although it is aimed at a general business audience, I think there are obvious applications for those working in AI.

The Manipulation Matrix is a two-by-two matrix. On the X axis, we consider whether the maker would use the product; on the Y axis, we consider whether the product improves users' lives. The creators of products then fall into one of four categories.

Facilitator (I think this is what most of us aspire to)

You would use the product yourself, and it improves users' lives, e.g. an online education tool, an app that helps you save money, a fitness tracker, or a device for measuring blood sugar or blood pressure.

Entertainer

If you use the product but can't claim it improves people's lives, it is probably just entertainment. And there certainly is a place for that; think Angry Birds or even Netflix.

Peddler

You believe your product improves people's lives but, when pressed, you would not use it yourself, even though it doesn't do any harm. Think advertising or even some fitness apps, as there are so many available.

Dealer

Interestingly, in the Netflix documentary The Social Dilemma, some Big Tech execs freely admitted that they don't let their children use social media. If you wouldn't use your product or wouldn't want your children to use it, and it doesn't improve people's lives, then you may be in this category. Think of casinos, big tobacco or online gambling. Do you think they fit into this category?

Those working in AI or big tech should consider where their project or product falls on the matrix. Does it make them feel proud? Does it influence positive or negative behaviours?
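As a rough aid to that exercise, the matrix can be written as a tiny function of its two axes. This is a sketch only: the function name and parameter names are hypothetical, and the honest answers to the two questions are the hard part, not the lookup.

```python
def manipulation_matrix(maker_would_use: bool, improves_lives: bool) -> str:
    """Map the two axes of the matrix to one of its four quadrants.

    maker_would_use: would the maker use the product themselves? (X axis)
    improves_lives: does the product improve users' lives? (Y axis)
    """
    if maker_would_use and improves_lives:
        return "Facilitator"
    if maker_would_use:
        return "Entertainer"
    if improves_lives:
        # The remaining quadrant: improves lives, but the maker
        # would not use the product themselves.
        return "Peddler"
    return "Dealer"


# Example: a product its makers avoid and that does not improve lives.
print(manipulation_matrix(maker_would_use=False, improves_lives=False))
```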

The Tech Industry

Big Tech is often guilty of using the approach that it is easier to beg for forgiveness than to seek permission. A great example of this was in 2010 when Facebook controversially changed the privacy settings for its 350 million users. Mark Zuckerberg once said, "we decided that these would be the social norms now, and we just went for it."

Can we afford to leave AI ethics up to Big Tech? I think not and feel that we all have a duty to ensure we have ethics in artificial intelligence. This includes citizens, legislators, organisations and employees working in big tech.

AI will have a massive impact on society in the coming years. It is up to all of us to ensure that it is a positive impact.

In Weapons of Math Destruction3, Cathy O'Neil discusses the impact of algorithms on society, e.g. those used for recruitment or loan applications. There is an inherent unfairness in how these algorithms are applied: "The privileged, we'll see time and again, are processed by people, the masses by machines". Consider the example of applying for an entry-level job at Walmart in the US, where an AI algorithm will most likely screen you. Compare this to the experience of a senior executive who has been headhunted for a Wall Street position and will most likely get the personal, human touch. O'Neil describes the three components of a weapon of math destruction (WMD):

• Opacity

• Scale

• Damage

Opacity

Even if people know they are being modelled, do they know how the model works or how it will be applied? Sometimes companies claim that the model is their secret sauce or intellectual property, or that it is a black box that cannot be explained.

Scale

Does the WMD impact one use case or population, or does it have the potential to scale exponentially and impact society?

Damage

Compare the impact of an algorithm that suggests an item to buy (Amazon) or a program to watch (Netflix) with an algorithm that determines whether you get a job, whether you qualify for a loan, or even how long a prison sentence you receive.

At a broader level, some claim that the ethics of the business model of some big players in Big Tech needs to be examined.

In her book The Age of Surveillance Capitalism4, Shoshana Zuboff discusses what she calls "behavioural futures markets", where surveillance capitalists sell certainty to their business customers, e.g. Google AdWords. She describes this as "a new economic order that claims human experience as free raw material for hidden commercial practices of extraction, prediction and sales". Zuboff sees this as a significant threat to modern society, one that she compares to industrial capitalism's impact on the natural world throughout the 19th and 20th centuries.

How the World is Responding

In November 2021, the 193 member states of UNESCO, the United Nations agency for education, science and culture, adopted the first-ever global agreement on the ethics of artificial intelligence. The EU set up its High-Level Expert Group on AI in 2018, and the group published its ethics guidelines for trustworthy AI in 2019. Many tech companies, facing both external pressure and internal pressure from employees, have begun a system of self-regulation and formed internal AI ethics initiatives. And some big players have drawn a line in the sand about what they will and will not do. For example, Google has said it will not sell facial recognition services to governments.

Case Study

Before I wrap up this article, let's look at a brief case study.

Facial recognition systems, in general, have led to wrongful arrests. Clearview AI trawled social media sites and obtained pictures of people without their consent. Recently, Ukraine has been using Clearview AI to vet people of interest at checkpoints and to identify the bodies of dead Russian soldiers so that their families can be informed. So, overall, is the company behaving ethically? Does the context matter? Is it an ethical product?

The importance of ethics in AI will only increase in the coming years as AI becomes even more pervasive. I believe we all have a role to play in ensuring that AI is a force for good in society. What role will you play?

References

1 Coeckelbergh, Mark. AI Ethics. Cambridge, Massachusetts, The MIT Press, 2020.

2 Eyal, Nir, and Ryan Hoover. Hooked: How to Build Habit-Forming Products. Penguin, 2014.

3 O'Neil, Cathy. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. Broadway Books, 2017.

4 Zuboff, Shoshana. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. New York, Public Affairs, 2019.